Dynamic programming and reinforcement learning

Image by: DrSJS

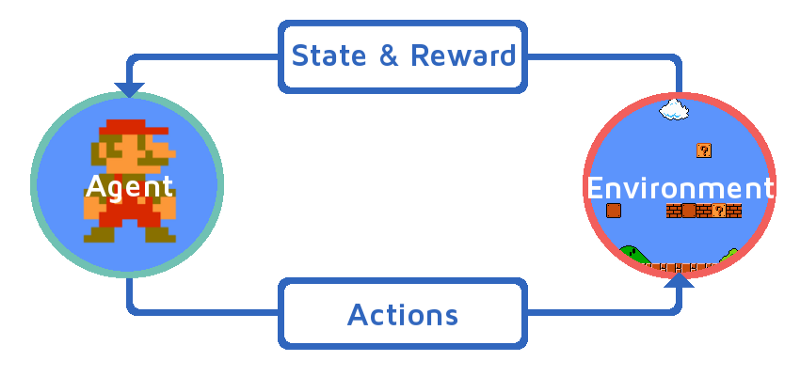

Dynamic programming is a technique for modeling and solving sequential decision problems under uncertainty. A decision maker (also called a controller) interacts with a system that can assume different states over time. Each decision affects the future states of the system, whose evolution over time is stochastic, that is, not fully predictable.

For each decision, the decision maker receives a reward (or incurs a cost). The goal of dynamic programming is to find an optimal policy (a set of decision rules that map states to decisions) that maximizes the total expected reward, or minimizes the total expected cost, over the planning horizon.
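As an illustration of how an optimal policy can be computed, here is a minimal value iteration sketch in Python. The two-state machine-maintenance problem, its transition probabilities, its rewards and the discount factor `GAMMA` are all hypothetical and were chosen only to keep the example small. An infinite discounted horizon is assumed.

```python
# Hypothetical two-state machine-maintenance problem (all numbers are illustrative).
# States: 0 = machine working, 1 = machine broken.
# Actions: 0 = operate, 1 = repair.
# P[s][a] is a list of (probability, next_state) pairs.
# R[s][a] is the expected immediate reward.
P = {
    0: {0: [(0.9, 0), (0.1, 1)], 1: [(1.0, 0)]},
    1: {0: [(1.0, 1)], 1: [(0.8, 0), (0.2, 1)]},
}
R = {
    0: {0: 10.0, 1: 4.0},
    1: {0: 0.0, 1: -5.0},
}
GAMMA = 0.9  # assumed discount factor


def q_value(V, s, a):
    """Expected reward of taking action a in state s, then following values V."""
    return R[s][a] + GAMMA * sum(p * V[s2] for p, s2 in P[s][a])


def value_iteration(tol=1e-8, max_iter=10_000):
    """Repeat the Bellman optimality update until the values stop changing."""
    V = {s: 0.0 for s in P}
    for _ in range(max_iter):
        new_V = {s: max(q_value(V, s, a) for a in P[s]) for s in P}
        delta = max(abs(new_V[s] - V[s]) for s in P)
        V = new_V
        if delta < tol:
            break
    # The policy picks, in each state, the action with the highest value.
    policy = {s: max(P[s], key=lambda a: q_value(V, s, a)) for s in P}
    return V, policy


V, policy = value_iteration()
print(policy)
```

With these illustrative numbers, the resulting policy operates the machine while it works and repairs it once it breaks. Exact methods of this kind require enumerating every state, which is why large problems call for approximations.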

The technique is also known as reinforcement learning in the machine learning community, where it has shown promising results in robotics and games. In this case, the decision maker is called an agent, while the system is referred to as the environment.
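The interaction between agent and environment can be sketched as a simple loop. The example below uses tabular Q-learning, one well-known reinforcement learning algorithm, on a hypothetical five-state corridor. The `Corridor` class, its reward scheme and all parameter values are assumptions made for illustration and are not taken from the project.

```python
import random


class Corridor:
    """Hypothetical environment: states 0..4, the episode ends on reaching state 4."""

    N_STATES = 5
    GOAL = 4

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # Action 0 moves left, action 1 moves right; the walls clip the move.
        move = 1 if action == 1 else -1
        self.state = min(max(self.state + move, 0), self.GOAL)
        done = self.state == self.GOAL
        reward = 1.0 if done else 0.0
        return self.state, reward, done


def choose_action(q_row, epsilon, rng):
    # Explore with probability epsilon, and break ties at random.
    if rng.random() < epsilon or q_row[0] == q_row[1]:
        return rng.randrange(2)
    return 0 if q_row[0] > q_row[1] else 1


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    env = Corridor()
    Q = [[0.0, 0.0] for _ in range(Corridor.N_STATES)]
    for _ in range(episodes):
        s = env.reset()
        for _ in range(200):  # cap on the episode length
            a = choose_action(Q[s], epsilon, rng)  # the agent decides
            s2, r, done = env.step(a)              # the environment responds
            target = r if done else r + gamma * max(Q[s2])
            Q[s][a] += alpha * (target - Q[s][a])  # the agent learns
            s = s2
            if done:
                break
    return Q


Q = q_learning()
# Greedy action in each non-terminal state (1 means "move right").
greedy = [max((0, 1), key=lambda a: Q[s][a]) for s in range(Corridor.GOAL)]
print(greedy)
```

Unlike value iteration, the agent here never sees the transition probabilities: it learns only from the states and rewards returned by `step`.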

In this project, we study the modeling of stochastic sequential decision problems that occur in production, logistics and transport. The problems are addressed using approximate dynamic programming techniques and reinforcement learning.

We have been testing statistical models and neural networks as approximators of value functions and for the direct approximation of policies. For training, we have been working with algorithms based on the stochastic gradient method, Kalman filters and Bayesian learning.
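To make the idea of a value function approximator trained by stochastic gradient steps concrete, the sketch below fits a linear model with semi-gradient TD(0), one common algorithm of this family. It is not necessarily the method used in the project. The test problem is a hypothetical five-state random walk (states 1 to 5, termination at 0 or 6, reward 1 only when leaving on the right), for which the true value of state `s` is `s/6`. The features, step size and number of episodes are assumptions.

```python
import random


def features(s):
    # Two hypothetical features: a constant and the centred, scaled position.
    return (1.0, (s - 3) / 3.0)


def v_hat(w, s):
    """Linear value estimate: inner product of weights and features."""
    return sum(wi * xi for wi, xi in zip(w, features(s)))


def td0_linear(episodes=20_000, alpha=0.002, seed=0):
    rng = random.Random(seed)
    w = [0.0, 0.0]
    for _ in range(episodes):
        s = 3  # every episode starts in the middle state
        while 1 <= s <= 5:
            s2 = s + rng.choice((-1, 1))
            reward = 1.0 if s2 == 6 else 0.0
            v_next = v_hat(w, s2) if 1 <= s2 <= 5 else 0.0  # terminal value is 0
            delta = reward + v_next - v_hat(w, s)  # TD error (no discounting)
            # Semi-gradient step: the gradient of v_hat w.r.t. w is the feature vector.
            x = features(s)
            for i in range(len(w)):
                w[i] += alpha * delta * x[i]
            s = s2
    return w


w = td0_linear()
estimates = {s: v_hat(w, s) for s in range(1, 6)}
print(estimates)
```

Only two weights are learned instead of one value per state. The same update applies when the linear model is replaced by a neural network, with the feature vector replaced by the gradient of the network output with respect to its weights.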

We have also been exploring deep reinforcement learning, in which deep neural networks are used as approximators of value functions and policies. This technique allows states to be represented by unstructured data, such as images and text.

Project team

Anselmo R. Pitombeira Neto (Coordinator)

Arthur H. F. Murta (Graduate student)

Vitória I.T. Mendonça (Graduate student)

Rodrigo F. Meneses (Undergraduate intern)